Vibe Check

Exploring my Claude Code logs for signs of intelligent life.

I've been interviewing a lot recently, and that has included take-home projects. With AI tooling being as good as it is, interviewers have to gauge my actual engineering competency indirectly. Can I describe what I've built and how it could be improved? Can I live-code a new feature?

Interviewers could probably benefit from reviewing my Claude Code logs. I could probably benefit as well. Let's take a look.

What to look for?

Prompt Quality

As a little exercise, try pulling up a previous Claude Code, Codex, or Cursor Agent session and read your prompts. How did you do? What measures of quality do you see? When I read my own prompts, specificity is a huge marker of quality. In the initial prompt and in follow-ups, did I refer to components of the architecture or just to behaviors of the features? Referring to functions, files, or architectural concepts indicates that I'm actively checking the proposed architecture against my mental model. The appropriate level of abstraction, however, is changing as AI assistants improve. As a term, "vibe coding" means operating above this level of abstraction. Two years ago (though the term didn't exist yet) that might have meant not running every changed line through your mental model; last year, every function; today, every file. People will inevitably disagree on where this line is and can set their own measures of prompt quality accordingly.
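A crude way to put a number on specificity is to count how often a prompt names a file or a function. The sketch below is only a rough proxy: the regular expressions, the list of file extensions, and the `specificity` helper are all assumptions made for illustration, and they will miss architectural concepts that are described in plain words.

```python
import re

# Hypothetical patterns: tune the extension list to your own projects.
FILE_REF = re.compile(r"\b[\w./-]+\.(?:py|ts|tsx|js|jsx|json|md|css|html)\b")
CALL_REF = re.compile(r"\b[A-Za-z_]\w*\(\)")  # names written like scoreQuiz()


def specificity(prompt: str) -> dict:
    """Return the file-like and call-like references found in a prompt."""
    return {
        "file_refs": FILE_REF.findall(prompt),
        "call_refs": CALL_REF.findall(prompt),
    }


# Made-up prompts, not taken from real logs.
specific = specificity("Update scoreQuiz() in src/quiz/score.ts to handle ties")
vague = specificity("make the quiz page feel nicer")
print(specific)
print(vague)
```

A prompt that scores zero is not necessarily bad, but a whole session of zeros is a hint that I was steering by behavior alone.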

Context Management

We've all experienced the degradation of quality when a context window starts to fill up. Avoiding any serious planning or coding above 70% context usage often saves me from headaches. Optimizing context also means diversifying perspectives. By saving a plan in one window and prompting another with "You are a senior architect who strongly believes in YAGNI. Evaluate this plan and ask clarifying questions." you'll get a fresh and less sycophantic perspective on your plan. Okay, that's enough talking. Let's explore the Claude Code logs.

The Data in Claude Code Logs

- Token counts (input, output, cache)

- Message content (text, thinking, tool calls)

- Timestamps, session IDs, model, git branch, etc.

Summary entry:

{
  "type": "summary",
  "summary": "GitHub Deployment & Vercel Config Fix",
  "leafUuid": "bc06c2e6-69c2-451c-b7bb-d68092509e53"
}

User message:

{
  "type": "user",
  "uuid": "85143832-582f-4067-b5c8-3704a298e35e",
  "parentUuid": null,
  "sessionId": "48c1ed77-58c8-4f27-9435-8ab849e83946",
  "timestamp": "2025-11-11T17:09:44.277Z",
  "cwd": "/Users/reed/Code/CodingInterview",
  "version": "2.0.37",
  "gitBranch": "main",
  "message": {
    "role": "user",
    "content": "okay I'd like to add a new quiz..."
  }
}

Assistant message (with thinking & token usage):

{
  "type": "assistant",
  "uuid": "9d705020-8657-4721-8765-875a11496183",
  "parentUuid": "85143832-582f-4067-b5c8-3704a298e35e",
  "sessionId": "48c1ed77-58c8-4f27-9435-8ab849e83946",
  "timestamp": "2025-11-11T17:09:50.149Z",
  "requestId": "req_011CV2UKrY78qKAxjoE4mrXU",
  "message": {
    "model": "claude-sonnet-4-5-20250929",
    "role": "assistant",
    "content": [
      {
        "type": "thinking",
        "thinking": "The user wants to add a new quiz type where...",
        "signature": "EtoFCkYICRgC..."
      },
      {
        "type": "text",
        "text": "I'll help you implement..."
      },
      {
        "type": "tool_use",
        "id": "toolu_123",
        "name": "Read",
        "input": { "file_path": "/path/to/file.ts" }
      }
    ],
    "stop_reason": "tool_use",
    "usage": {
      "input_tokens": 9,
      "output_tokens": 376,
      "cache_creation_input_tokens": 12325,
      "cache_read_input_tokens": 12249,
      "service_tier": "standard"
    }
  }
}

The key structure is that each line of a log file is a complete JSON object whose type field indicates what kind of entry it is (user, assistant, summary, file-history-snapshot). Messages are linked via uuid/parentUuid.
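As a minimal sketch of reading this format, the Python below parses one JSON object per line, counts entries by type, and builds a parent-to-children map from uuid/parentUuid. The helper names and the shortened IDs in the sample are hypothetical, and real logs may contain fields or entry types not shown here.

```python
import json
from collections import Counter, defaultdict


def load_entries(lines):
    """Parse JSONL lines into dicts, skipping blank lines."""
    return [json.loads(line) for line in lines if line.strip()]


def count_types(entries):
    """Count entries by their "type" field."""
    return Counter(e.get("type", "unknown") for e in entries)


def children_by_parent(entries):
    """Map each parentUuid (None for a root) to the uuids that follow it."""
    children = defaultdict(list)
    for e in entries:
        if "uuid" in e:  # summary entries carry leafUuid instead of uuid
            children[e.get("parentUuid")].append(e["uuid"])
    return dict(children)


# Abbreviated stand-ins for real log lines; "u1" and "a1" are made-up IDs.
sample = [
    '{"type": "summary", "summary": "GitHub Deployment & Vercel Config Fix"}',
    '{"type": "user", "uuid": "u1", "parentUuid": null}',
    '{"type": "assistant", "uuid": "a1", "parentUuid": "u1"}',
]
entries = load_entries(sample)
print(count_types(entries))
print(children_by_parent(entries))
```

To run it against a real session, pass an open file object to `load_entries` in place of `sample`, since iterating a file yields one line at a time.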

What I've found

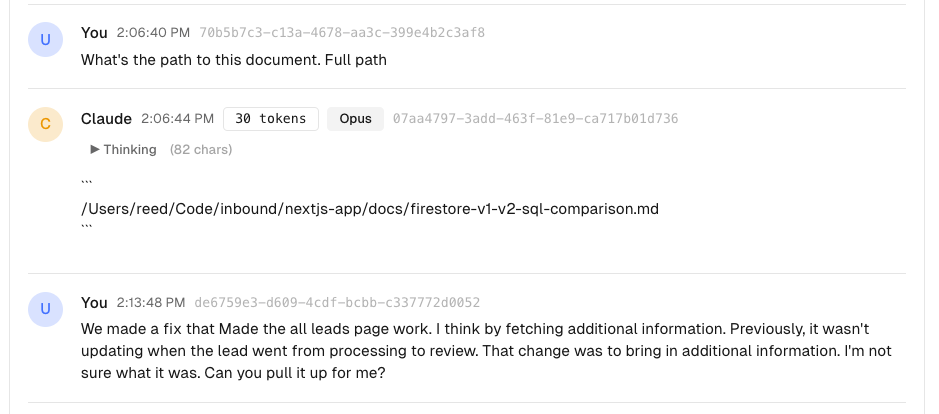

1. New topics in open windows

This shows me using a window that I had been planning in for debugging a completely new topic.

2. Pushing context windows too far

I generally feel that if the context window is compacting, I've been operating within a degrading context window for far too long. I plan on measuring this but haven't gotten there yet.
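One way this measurement might be approached is to estimate context usage per assistant turn from the usage block in each log entry. The sketch below treats the sum of input_tokens, cache_creation_input_tokens, and cache_read_input_tokens as the prompt size for that turn. The 200,000-token window is an assumption that depends on the model, the 70% threshold is my own rule of thumb, and the helper names are hypothetical.

```python
ASSUMED_CONTEXT_WINDOW = 200_000  # assumption: adjust for the model in use
THRESHOLD = 0.70  # rule-of-thumb limit, not an official figure


def context_tokens(usage):
    """Approximate prompt size for one turn from its usage block."""
    return (
        usage.get("input_tokens", 0)
        + usage.get("cache_creation_input_tokens", 0)
        + usage.get("cache_read_input_tokens", 0)
    )


def turns_over_threshold(entries, window=ASSUMED_CONTEXT_WINDOW, threshold=THRESHOLD):
    """Return (uuid, fraction) for assistant turns above the threshold."""
    flagged = []
    for e in entries:
        if e.get("type") != "assistant":
            continue
        usage = e.get("message", {}).get("usage")
        if not usage:
            continue
        fraction = context_tokens(usage) / window
        if fraction > threshold:
            flagged.append((e.get("uuid"), round(fraction, 3)))
    return flagged


# Usage numbers from the assistant example in this post: well under the limit.
example_usage = {
    "input_tokens": 9,
    "cache_creation_input_tokens": 12325,
    "cache_read_input_tokens": 12249,
}
print(context_tokens(example_usage) / ASSUMED_CONTEXT_WINDOW)

# A made-up late-session turn to show what gets flagged.
late_turn = {
    "type": "assistant",
    "uuid": "a9",
    "message": {"usage": {"input_tokens": 5, "cache_read_input_tokens": 150_000}},
}
print(turns_over_threshold([late_turn]))
```

Counting how many turns in a session land in the flagged list would give a rough measure of how long I kept working past the point where I should have started fresh.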

More to come

I've built a little GUI tool to help me dig deeper into my Claude Code logs, and I'll share more when I've done some more exploring.